A few months ago, I was first exposed to GitOps and fell in love with it pretty quickly. I began planning a migration from our existing CD process in Azure Pipelines to Flux.

Moving our services and tasks over was pretty easy, but we ran into one problem: our existing CD process applied the database migrations right before the new pods were deployed, and there's no direct way to do that with Flux.

With Flux, all of your deployments are just standard Kubernetes YAML definitions that can optionally be templated with a tool like Kustomize, so there's no obvious place to run a step right before the new pods roll out. The tempting workaround is to run the migrations when the app starts, but if you are familiar with EF Core migrations, you know that's bad practice. In an environment like Kubernetes, it's easy to have multiple pods start at the same time, and all of them can then try to migrate your database at once.

Using Helm Pre-Install and Pre-Upgrade Hooks

It occurred to me that Helm's pre-install and pre-upgrade hooks would be perfect for this! On every install or upgrade, Helm can run a Kubernetes Job and wait for it to finish before it touches the rest of the chart. If the Job succeeds, the rest of the chart is deployed and the Job can be cleaned up automatically. If it fails, the release stops and your existing pods are left as they are.
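Hooks only fire when Helm actually installs or upgrades the chart, so this approach assumes Flux deploys the service through a HelmRelease (reconciled by Flux's helm-controller, which runs a normal Helm install or upgrade) rather than as plain or kustomized manifests. The sketch below is a minimal example under that assumption; the release name, chart path, GitRepository name, and image tag value are all hypothetical, and it targets the helm.toolkit.fluxcd.io/v2 API served by recent Flux releases (older installs use v2beta1 with the same fields shown here).

```yaml
# Hypothetical HelmRelease. Flux's helm-controller performs a regular Helm
# install/upgrade for it, so the chart's pre-install/pre-upgrade hooks run.
apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: my-api                  # hypothetical release name
  namespace: default
spec:
  interval: 5m                  # how often Flux reconciles the release
  chart:
    spec:
      chart: ./charts/my-api    # hypothetical chart path inside the Git repo
      sourceRef:
        kind: GitRepository
        name: my-repo           # hypothetical GitRepository source
        namespace: flux-system
  values:
    image:
      tag: "1.2.3"              # hypothetical; changing it triggers an upgrade
```

Bumping a value like the image tag in Git causes Flux to upgrade the release, which is what gives the pre-upgrade hook a chance to run before the new pods roll out.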

The first thing I did was change the host builder code for ASP.NET Core. If a specific flag (I used -m) is passed on the command line at startup, the app runs the migrations and exits.

Here, we build the host just like we normally would, but instead of running it, we create a service scope and use it to resolve an instance of our DbContext. On that instance we call Database.Migrate(), which applies any pending migrations. When the migration completes, the app exits instead of starting the web server.

I chose to add the migration functionality to my existing app. You could also build a separate image containing only the migration logic, but adding another image to the build didn't seem worth it.

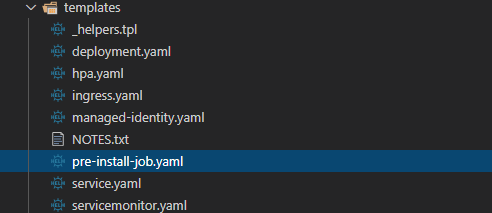

Next, add the YAML for the Job itself. It's very easy to work with: it's just a normal Kubernetes Job with three additional annotations.
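The post doesn't name the three annotations, so the set below is an assumption based on Helm's documented hook annotations: helm.sh/hook runs the Job before install and upgrade, helm.sh/hook-weight orders it relative to any other hooks in the chart, and helm.sh/hook-delete-policy removes it once it succeeds (and clears out a leftover Job before the next run). This is a sketch, not the author's manifest; the file name, Job name suffix, and the image.repository/image.tag values are hypothetical, and it assumes the app image's ENTRYPOINT starts the app, so the args are appended to it as command-line arguments.

```yaml
# templates/db-migrate-job.yaml (hypothetical file name inside the chart)
apiVersion: batch/v1
kind: Job
metadata:
  name: "{{ .Release.Name }}-db-migrate"
  annotations:
    # Run this Job before the chart's other resources on install and upgrade.
    "helm.sh/hook": pre-install,pre-upgrade
    # Lower weights run first if the chart defines several hooks.
    "helm.sh/hook-weight": "-5"
    # Delete a leftover Job before creating a new one, and delete this one
    # once it succeeds. A failed Job is kept so you can read its logs.
    "helm.sh/hook-delete-policy": before-hook-creation,hook-succeeded
spec:
  backoffLimit: 0               # don't retry a failed migration; fail the release instead
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: db-migrate
          # Same image as the app (assumption: its ENTRYPOINT starts the app,
          # so these args become the app's command-line arguments).
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          args: ["-m"]
```

One thing to watch: a pre-install hook runs before the chart's other resources exist, so on the very first install the Job can't rely on a ConfigMap or Secret defined elsewhere in the same chart for its database settings.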

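If you use managed identity to connect to your database (and you should be), you will want to create an additional managed identity that is a member of the db_owner role in the database you want to migrate, so the identity your app runs under day to day doesn't need those elevated rights. If your migrations will only ever change the schema and never move data around, it might be better to put it in db_ddladmin instead; note that EF Core also reads and writes its __EFMigrationsHistory table, so that identity will likely need data access to at least that table as well.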

When the migration completes, the pod exits, the Job is marked as complete, and Helm gets the green light to move forward with the release.

I was pretty happy with this solution. Let me know in the comments what you think or if you have any questions!
